Getting Started with GPUs on Google Cloud: Everything You Need to Know

Organizations are increasingly turning to GPU-accelerated computing for their most demanding workloads. Google Cloud Platform (GCP) offers a range of GPU options that can accelerate artificial intelligence, machine learning, data analytics, scientific computing, gaming, and rendering tasks. This guide covers the available GPU types, the main deployment considerations, common use cases, pricing, and best practices for GPUs on Google Cloud.

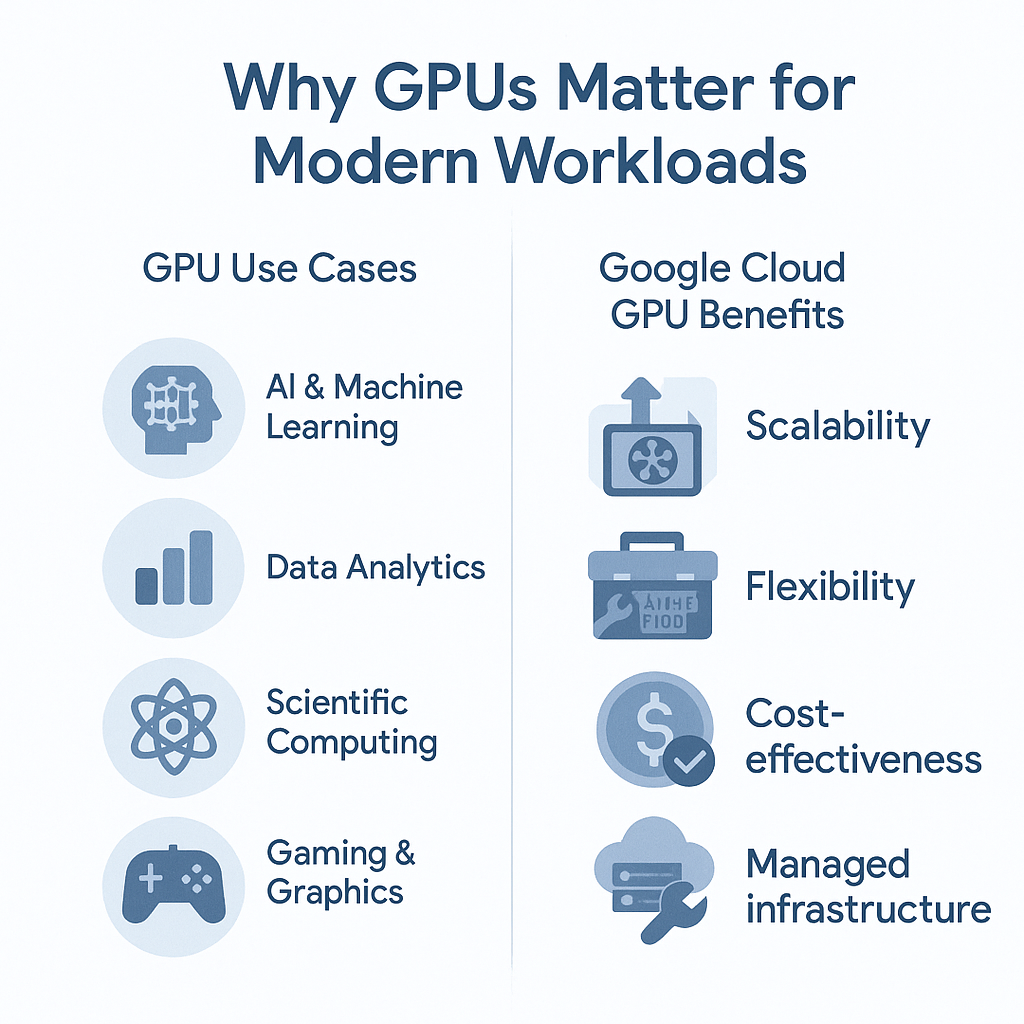

1. Why GPUs Matter in the Cloud

The computational demands of modern applications—particularly in AI, machine learning, and gaming—have outpaced what traditional CPU-only architectures can efficiently deliver. GPUs (Graphics Processing Units) excel at parallel processing tasks, offering significant performance advantages for specific workloads:

- AI and Machine Learning: Training complex neural networks can be dramatically faster on GPUs than on CPUs (speedups of up to 100x are cited for some models, though the gain depends heavily on the workload)

- Data Analytics: Process and visualize massive datasets with dramatically improved performance

- Scientific Computing: Accelerate simulations, modeling, and research computations

- Gaming and Graphics: Enable high-quality, responsive gaming experiences with realistic visual effects

- Rendering and Visualization: Support real-time 3D rendering and complex visualizations

Google Cloud's GPU offerings provide these benefits while maintaining the cloud advantages you expect:

- Scalability: Scale GPU resources up or down based on your computational needs

- Flexibility: Choose from various GPU types optimized for different workloads

- Cost-effectiveness: Pay only for what you use, avoiding heavy capital investment in hardware

- Managed infrastructure: Focus on your applications rather than maintaining physical GPU servers

2. Understanding Google Cloud GPU Options

Google Cloud offers several GPU options to match different performance needs and budget considerations. These can be categorized into three performance tiers:

Economy Tier

NVIDIA T4 GPUs on N1 VMs

Best for: Cost-effective inference, light ML training, general game development

Features: 16GB memory, good for general-purpose compute

Ideal workloads: Development environments, smaller ML models, light gaming applications

Mid-Range Tier

G2 VMs with NVIDIA L4 GPUs

Best for: Balanced performance and cost efficiency

Features: Optimized for inference and computer vision workloads

Ideal workloads: Video transcoding, computer vision applications, graphics rendering

NVIDIA P4 and P100 GPUs (on select VM types)

Best for: Higher-end graphics processing and moderate to high-grade gaming

Features: Good balance of compute power and memory

Ideal workloads: Higher-end graphics, moderate AI workloads

High-Performance Tier

A2 VMs with NVIDIA A100 GPUs

Best for: High-performance AI training and inference

Features: Third-generation Tensor Cores, up to 80GB of memory per GPU

Ideal workloads: Deep learning model training, high-performance computing, complex data science

A3 VMs with NVIDIA H100 or H200 GPUs

Best for: High-performance computing and the most demanding AI workloads

Features: Fourth-generation Tensor Cores; 80GB of HBM3 memory per H100 GPU, or 141GB of HBM3e memory per H200 GPU

Ideal workloads: Foundation model training, complex simulations

A4 VMs with NVIDIA B200 GPUs

Best for: Large-scale AI training and inference workloads

Features: Latest NVIDIA Blackwell architecture with significant performance improvements

Ideal workloads: Large language models, diffusion models, and enterprise AI applications
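
One way to turn the tiers above into a first-pass shortlist is to filter by GPU memory. The sketch below is only an illustration: `GpuOption`, `CATALOG`, and `shortlist` are hypothetical names, and the catalog carries only the memory figures this article states (entries without a stated figure are set to `None` and skipped). Note that 80GB is the upper bound quoted for the A100, so smaller A100 configurations would not satisfy every request that this function lets through.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class GpuOption:
    name: str
    tier: str                  # tier label used in this article
    memory_gb: Optional[int]   # None where the article gives no figure


# Hypothetical catalog mirroring the tiers above (illustrative, not an API).
CATALOG = [
    GpuOption("T4 on N1", "economy", 16),
    GpuOption("L4 on G2", "mid-range", None),
    GpuOption("P4/P100", "mid-range", None),
    GpuOption("A100 on A2", "high-performance", 80),   # "up to" 80GB
    GpuOption("H100 on A3", "high-performance", 80),
    GpuOption("B200 on A4", "high-performance", None),
]

TIER_ORDER = ["economy", "mid-range", "high-performance"]


def shortlist(min_memory_gb: int) -> List[str]:
    """Names of options whose stated memory meets the need, lowest tier first."""
    fits = [
        g for g in CATALOG
        if g.memory_gb is not None and g.memory_gb >= min_memory_gb
    ]
    # sorted() is stable, so catalog order is kept within a tier.
    fits = sorted(fits, key=lambda g: TIER_ORDER.index(g.tier))
    return [g.name for g in fits]
```

For example, `shortlist(40)` leaves only the A100 and H100 options, while `shortlist(12)` also includes the T4. Treat the result as a starting point for benchmarking rather than a recommendation.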

3. Key Considerations When Using GPUs on Google Cloud

Region and Zone Selection

GPU availability varies significantly across Google Cloud's global infrastructure:

- Regions with broad GPU selection: us-central1, us-east4, us-west1, and europe-west4 typically offer the broadest selection of GPU types

- Specialized GPU access: A3 VMs with H100 GPUs have limited availability and may require capacity planning

- Quota considerations: GPU quotas are set per region, alongside a project-wide quota covering GPUs in all regions, and may need to be increased for large-scale deployments

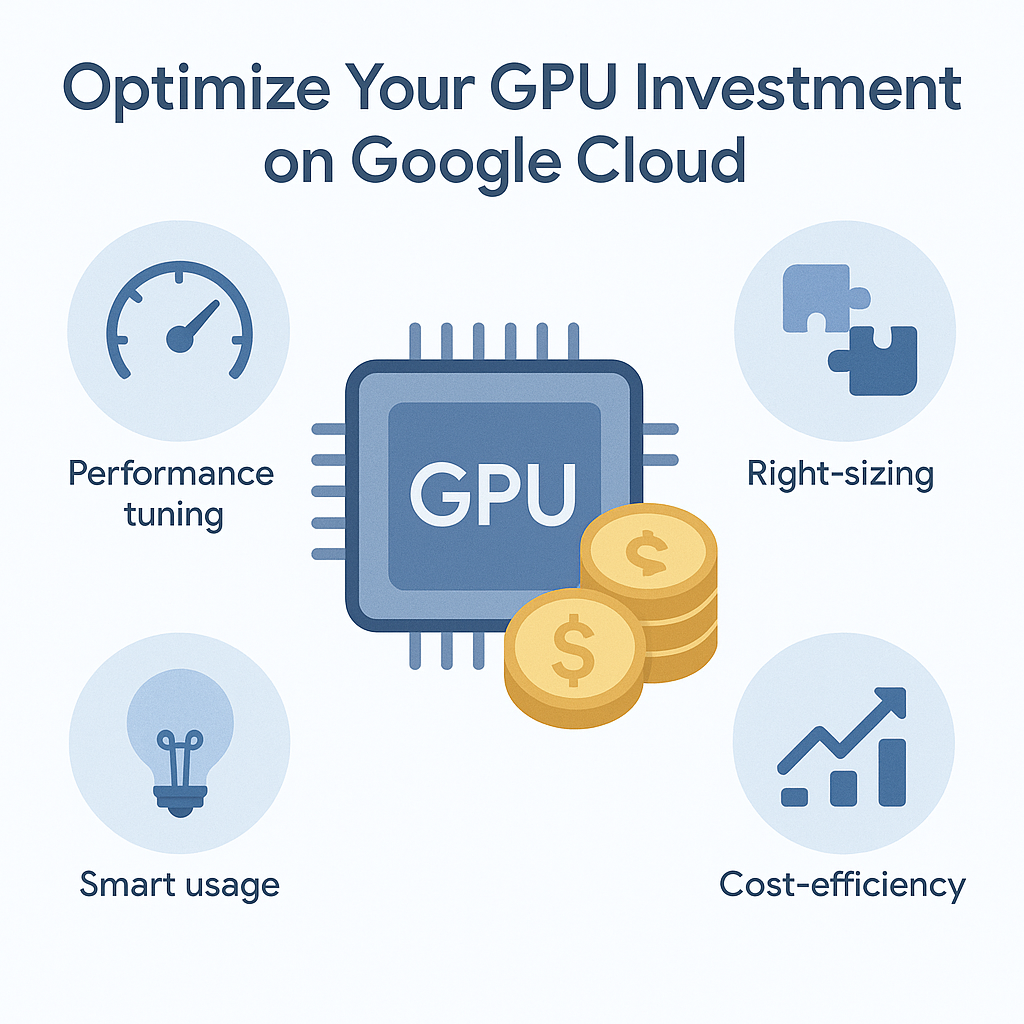
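
Because availability differs by zone, it can help to check what a region actually offers before planning a deployment. The sketch below assumes the `gcloud` CLI is installed and authenticated, and that `gcloud compute accelerator-types list --format=json` returns records with `name` and `zone` fields, where `zone` may be a full resource URL. The helper names `gpus_by_zone` and `list_gpus` are hypothetical.

```python
import json
import subprocess
from collections import defaultdict

# Lists every accelerator type visible to the active project, as JSON.
LIST_CMD = ["gcloud", "compute", "accelerator-types", "list", "--format=json"]


def gpus_by_zone(records, region):
    """Group accelerator type names by zone for the zones of one region."""
    zones = defaultdict(list)
    for rec in records:
        # Assumption: zone may be a resource URL; keep only its last segment.
        zone = rec["zone"].rsplit("/", 1)[-1]
        if zone.startswith(region + "-"):
            zones[zone].append(rec["name"])
    return {zone: sorted(names) for zone, names in sorted(zones.items())}


def list_gpus(region):
    """Run gcloud and return {zone: [accelerator type names]} for a region."""
    result = subprocess.run(
        LIST_CMD, check=True, capture_output=True, text=True
    )
    return gpus_by_zone(json.loads(result.stdout), region)
```

Calling `list_gpus("us-central1")` would then return a mapping from each zone in that region to the accelerator types it exposes. A type appearing in the list does not guarantee capacity or quota, so the quota and capacity points above still apply.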

Performance Optimization

To maximize GPU performance on Google Cloud:

- Right-sizing: Match your GPU type to your specific workload requirements

- VM configuration: Pair GPUs with sufficient CPU and memory resources to prevent bottlenecks

- Storage selection: Use high-performance SSD storage for data-intensive workloads

- Networking: For workloads that span multiple GPUs or VMs, place the VMs close together (for example, in the same zone) to minimize latency

Security Considerations

When implementing GPU workloads:

- VM isolation: GPU VMs follow the same security models as standard Compute Engine VMs

- Network security: Implement appropriate firewall rules and VPC configurations

- Image security: Use verified VM images with up-to-date drivers and security patches

- Access control: Implement least-privilege IAM policies for GPU resource management

4. Popular GPU Use Cases on Google Cloud

Machine Learning and AI

Model training: Accelerate training of deep neural networks

Inference: Deploy trained models for low-latency prediction

Large language models: Power foundation models with specialized GPUs

Computer vision: Process and analyze visual data efficiently

High-Performance Computing

Scientific simulations: Accelerate molecular dynamics and modeling

Financial modeling: Run complex risk assessment models

Genomics research: Speed up DNA sequencing and analysis

Energy research: Accelerate seismic analysis and simulation

Gaming and Interactive Media

Game servers: Host high-performance game rendering engines

Graphics rendering: Create convincing and realistic game graphics

AI-driven features: Support advanced NPC behavior

Ray tracing: Enable advanced lighting effects

Media and Entertainment

Rendering: Transform 3D scenes into 2D images

Video transcoding: Convert media files between formats

Live streaming: Enable real-time video processing

Content creation: Power creative tools for designers

5. Cost Management and Optimization

GPU instances represent a significant investment, so it's essential to optimize your usage:

Understanding GPU Pricing

| GPU Type | Approximate on-demand price (USD per GPU per hour) |
|---|---|
| A4 VMs with NVIDIA B200 | $8-12 |
| A3 VMs with NVIDIA H100 | $5-8 |
| A2 VMs with NVIDIA A100 | $3-5 |
| G2 VMs with NVIDIA L4 | $0.60-0.75 |
| T4 GPUs on N1 | $0.35-0.45 |

- Commitment discounts: Save 20-50% with 1-year or 3-year commitments

- Spot pricing: Save 60-91% with Spot VMs (the successor to preemptible VMs), which can be reclaimed at any time and so suit fault-tolerant workloads

Note: Exact pricing varies by region and commitment level. Check the Google Cloud pricing calculator for current rates.
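
To see how these ranges combine, here is a rough budgeting sketch. It uses only the approximate hourly ranges and discount percentages quoted in this section, and the names `ON_DEMAND`, `SPOT`, `COMMITTED`, and `estimate` are hypothetical. It also ignores the cost of the VM's CPU, memory, storage, and networking, so it shows the arithmetic and is not a substitute for the pricing calculator.

```python
# Approximate on-demand ranges (USD per GPU per hour) from the table above.
ON_DEMAND = {
    "B200": (8.00, 12.00),
    "H100": (5.00, 8.00),
    "A100": (3.00, 5.00),
    "L4": (0.60, 0.75),
    "T4": (0.35, 0.45),
}

# Savings ranges quoted in this section, as (min, max) fractions.
SPOT = (0.60, 0.91)
COMMITTED = (0.20, 0.50)


def estimate(gpu, gpu_count, hours, saving=(0.0, 0.0)):
    """Return a (low, high) USD range for gpu_count GPUs running for hours."""
    low_rate, high_rate = ON_DEMAND[gpu]
    min_saving, max_saving = saving
    # Best case: cheapest rate with the largest saving; worst case the reverse.
    low = low_rate * gpu_count * hours * (1 - max_saving)
    high = high_rate * gpu_count * hours * (1 - min_saving)
    return round(low, 2), round(high, 2)
```

For example, `estimate("A100", 8, 100)` gives an on-demand range of roughly $2,400 to $4,000 for 800 GPU-hours, and passing `SPOT` as the last argument narrows that to roughly $216 to $1,600. The width of the range is a reminder to confirm actual regional rates before committing to a budget.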

6. Best Practices for GPU Deployment

Workload-Specific Optimizations

For AI/ML workloads

- Use distributed training frameworks for multi-GPU setups

- Implement mixed precision training when appropriate

- Optimize batch sizes based on GPU memory

- Consider GPU memory bandwidth alongside compute power
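
As a back-of-the-envelope illustration of sizing batches to GPU memory, the sketch below subtracts the fixed training state from total memory and divides the remainder by per-sample activation memory. Everything here is an assumption for illustration: the `max_batch_size` helper is hypothetical, 16 bytes per parameter is a common rule of thumb for Adam-style training (weights, gradients, and optimizer state), and the 10% reserve stands in for runtime overhead and fragmentation. Real limits should be found by profiling.

```python
GIB = 1024 ** 3


def max_batch_size(gpu_memory_gib, param_count, activation_bytes_per_sample,
                   bytes_per_param=16, reserve_fraction=0.10):
    """Estimate the largest batch that fits, or 0 if the model alone does not."""
    total = gpu_memory_gib * GIB
    fixed = param_count * bytes_per_param   # weights + gradients + optimizer state
    reserved = total * reserve_fraction     # headroom for runtime overhead
    available = total - fixed - reserved
    if available <= 0:
        return 0
    return int(available // activation_bytes_per_sample)


# Example: a 500M-parameter model on a 16GB GPU, assuming ~200 MiB of
# activations per sample (a made-up figure for illustration).
batch = max_batch_size(16, 500_000_000, 200 * 1024 ** 2)
```

Under these assumptions the example yields a batch size of 35, while a 2-billion-parameter model returns 0 on the same GPU, which signals that a larger GPU or a multi-GPU setup is needed.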

For HPC workloads

- Implement efficient data transfer patterns

- Use NVIDIA GPUDirect technologies for faster I/O where the VM type supports them

- Consider memory hierarchy in algorithm design

- Profile workloads to identify bottlenecks

For rendering and visualization

- Optimize scene complexity for available memory

- Use GPU-specific rendering optimizations

- Balance CPU preprocessing with GPU rendering

- Implement progressive rendering for interactive applications

7. Conclusion

Getting started with GPUs on Google Cloud opens up tremendous possibilities for accelerating your computational workloads. From deep learning and AI to scientific computing and rendering, these powerful accelerators can transform what's possible in the cloud.

By understanding the available options and implementing cost optimization strategies, you can leverage the full power of GPUs while maintaining control over your cloud spending.

Ready to take your cloud applications to the next level? Start exploring GPU-accelerated computing on Google Cloud today!

Additional Resources

- Google Cloud GPU Documentation

- NVIDIA GPU Cloud (NGC) Catalog

- Google Cloud Deep Learning VM Images

- Google Cloud Vertex AI for managed ML with GPU acceleration